Section: New Results

Interaction Techniques

Participants: Caroline Appert, Michel Beaudouin-Lafon, David Bonnet, Anastasia Bezerianos, Olivier Chapuis [correspondent], Cédric Fleury, Stéphane Huot, Can Liu, Wendy Mackay, Halla Olafsdottir, Cyprien Pindat, Theophanis Tsandilas.

We explore interaction techniques in a variety of contexts, including individual interaction techniques on display surfaces that range from mobile devices, through standard desktops and tabletops, to very large wall-sized displays. This year, we investigated how people can use different body parts and limbs to convey information to interactive systems. BodyScape provides a framework for analysing and designing interaction techniques that involve the entire human body. Both WristPointing, which overcomes the limited range of motion of the wrist, and HeadPad, which takes the user's head orientation into account, are whole-body techniques that facilitate target acquisition. Arpège can interpret a wide range of chord gestures, designed according to the range of motion and limitations of the human hand, and includes a dynamic guide with integrated feedforward/feedback to enhance learning by novices without slowing down experts. On mobile devices, we designed novel interaction techniques that increase the expressivity of single-finger gestures, including ThumbRock, based on movement dynamics, SidePress, which senses pressure on the device, and Powerup, which detects proximity. We also continued to develop advanced interactive visualization techniques, including Gimlenses, which supports focus+context representations for navigating within 3D scenes.

BodyScape – The entire human body plays a central role in interaction. The BodyScape design space [34] (honorable mention at CHI 2013) explores the relationship between users and their environment, specifically how different body parts enhance or restrict movement for specific interactions. BodyScape can be used to analyze existing techniques or suggest new ones. In particular, we used it to design and compare two free-hand techniques, on-body touch and mid-air pointing, first separately, then combined. We found that touching the torso is faster than touching the lower legs, since reaching the lower legs affects the user's balance, and that touching targets on the dominant arm is slower than touching targets on the torso, because the user must compensate for the applied force.

HeadPad – Rich interaction with high-resolution wall displays is not limited to remotely pointing at targets. Other relevant types of interaction include virtual navigation, text entry, and direct manipulation of control widgets. However, most work on remotely acquiring targets with high precision has studied remote pointing in isolation, focusing on pointing efficiency and ignoring the need to support these other types of interaction. We investigated high-precision pointing techniques capable of acquiring targets as small as 4 millimeters on a 5.5-meter-wide display while leaving up to 93% of a typical tablet device's screen space available for task-specific widgets [27]. We compared these techniques to state-of-the-art distant pointing techniques and showed that two of our techniques, a purely relative one and one that uses head orientation, perform as well as or better than the best pointing-only input techniques while using a fraction of the interaction resources.

WristPointing – Wrist movements are physically constrained and take place within a small range around the hand's rest position. We explored pointing techniques that deal with the physical constraints of the wrist and extend the range of its input without making use of explicit mode-switching mechanisms [33]. Taking into account the elastic properties of human joints, we investigated designs based on rate control. In addition to pure rate control, we examined a hybrid technique that combines position and rate control, and a technique that applies non-uniform position-control mappings. Our experimental results suggest that rate control is particularly effective under low-precision input and long target distances. Hybrid and non-uniform position-control mappings, on the other hand, result in higher precision and become more effective as input precision increases.
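To make the difference between these families of mappings concrete, here is a minimal sketch. It is not the implementation evaluated in [33]: the function names, gains, threshold, and exponent are all hypothetical values chosen for illustration, and wrist deflection is assumed to be a single angle in radians measured from the rest position.

```python
def clamp(value, limit):
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def position_step(prev_angle, angle, gain=1.0):
    """Position control: the cursor follows the change in wrist angle."""
    return gain * (angle - prev_angle)


def rate_step(angle, dt, gain=2.0):
    """Rate control: wrist deflection sets cursor velocity, integrated over dt."""
    return gain * angle * dt


def hybrid_step(prev_angle, angle, dt, threshold=0.3, pos_gain=1.0, rate_gain=2.0):
    """Hybrid (assumed design): position control inside the threshold,
    plus a rate term driven by the deflection beyond it."""
    dx = pos_gain * (clamp(angle, threshold) - clamp(prev_angle, threshold))
    excess = angle - clamp(angle, threshold)
    return dx + rate_gain * excess * dt


def nonuniform_position(angle, gain=1.0, exponent=2.0):
    """Non-uniform position mapping (assumed power law): low gain near the
    rest position, higher gain towards the limits of the wrist's range."""
    sign = 1.0 if angle >= 0 else -1.0
    return sign * gain * abs(angle) ** exponent


# Holding the wrist still at 0.5 rad for ten steps of 0.1 s each.
held = [0.5] * 11
pos_travel = sum(position_step(a, b) for a, b in zip(held, held[1:]))
rate_travel = sum(rate_step(b, 0.1) for b in held[1:])
```

With the wrist held still, `pos_travel` stays at zero while `rate_travel` keeps growing. This is why rate control can cover long distances from within the wrist's small range of motion, at the cost of the direct angle-to-position correspondence that position-control mappings keep.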

Arpège – While multi-touch input has become standard on touchscreen devices, with simple techniques such as pinch-to-zoom, the number of gestures that systems are able to interpret remains rather small. Arpège [23] combines a progressive multi-touch input technique for learning chords with a robust recognizer and guidelines for building large chord vocabularies. We conducted two experiments to evaluate our approach. The first experiment validated our design guidelines and suggests implications for designing vocabularies: users prefer relaxed chords to tense ones, chords with fewer fingers, and chords with fewer tense fingers. The second experiment demonstrated that users can learn and remember a large chord vocabulary with both Arpège and cheat sheets, and that Arpège encourages the creation of effective mnemonics.

ThumbRock – Compared with mouse-based interaction on a desktop interface, touch-based interaction on a mobile device is quite limited: most applications only support tapping and dragging to perform simple gestures. Finger rolling provides an alternative to tapping, but existing recognition processes rely on per-user calibration, explicit delimiters or extra hardware, making the gesture difficult to integrate into current touch-based mobile devices. We introduce ThumbRock [19], a ready-to-use micro gesture that consists of rolling the thumb back and forth on the touchscreen. Our algorithm recognizes ThumbRocks with more than 96% accuracy, without calibration or explicit delimiters, by analyzing the data provided by the touchscreen at a low computational cost. The full trace of the gesture is analyzed incrementally to ensure compatibility with other events and to support real-time feedback. This also makes it possible to create a continuous control space, as we illustrate with our MicroSlider, a 1D slider manipulated with thumb-rolling gestures.
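The idea of analyzing a trace incrementally, one touch sample at a time, can be illustrated with a toy detector. This is not the ThumbRock recognizer from [19], whose features are not described here. It is a hypothetical state machine that only looks at one coordinate of the contact point, and its class name and thresholds (in pixels) are assumptions made for the sake of the example.

```python
class RockDetector:
    """Toy incremental detector for a forth-then-back movement on one axis."""

    def __init__(self, min_travel=3.0, return_tolerance=1.5):
        self.min_travel = min_travel              # minimum excursion to count as "forth"
        self.return_tolerance = return_tolerance  # how close to the start counts as "back"
        self.start = None
        self.peak = 0.0                           # largest signed offset seen so far
        self.state = "idle"

    def feed(self, y):
        """Consume one touch sample; return True when the gesture completes."""
        if self.start is None:
            self.start = y
            self.state = "forth"
            return False
        offset = y - self.start
        if self.state == "forth":
            if abs(offset) > abs(self.peak):
                self.peak = offset                # still moving away from the start
            elif abs(self.peak) >= self.min_travel and abs(offset) < abs(self.peak):
                self.state = "back"               # far enough, and now returning
        if self.state == "back" and abs(offset) <= self.return_tolerance:
            self.state = "done"
            return True
        return False


def first_detection(samples):
    """Index of the sample at which the gesture is recognized, or None."""
    detector = RockDetector()
    for index, y in enumerate(samples):
        if detector.feed(y):
            return index
    return None


rock = first_detection([0, 1, 2, 4, 5, 4, 2, 1, 0.5])
drag = first_detection([0, 1, 2, 4, 6, 8])
jitter = first_detection([0, 0.5, 0, 0.4])
```

Because each sample is processed as it arrives, the intermediate `offset` is available at every step, which is the kind of property a continuous control such as a slider needs. A plain drag (`drag`) and the small jitter of a tap (`jitter`) never complete the pattern.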

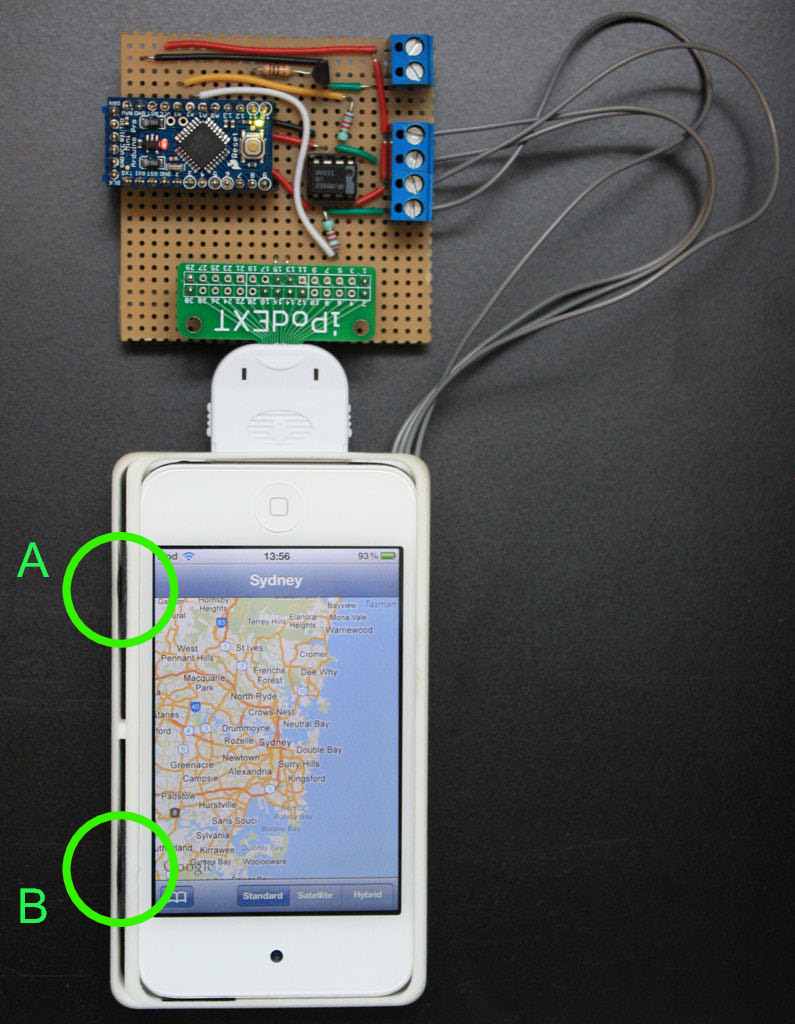

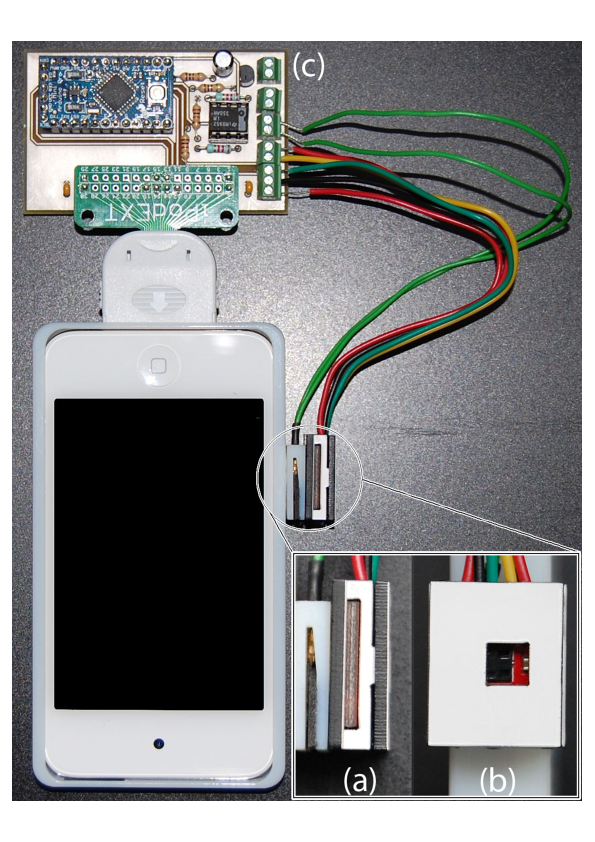

SidePress – Virtual navigation on a mobile touchscreen is usually performed using finger gestures: drag and flick to scroll or pan, pinch to zoom. While easy to learn and perform, these gestures cause significant occlusion of the display. They also require users to explicitly switch between navigation mode and edit mode to either change the viewport's position in the document, or manipulate the actual content displayed in that viewport, respectively. SidePress [31] augments mobile devices with two continuous pressure sensors co-located on one of their sides (Figure 9, left). It provides users with generic bidirectional navigation capabilities at different levels of granularity, all seamlessly integrated to act as an alternative to traditional navigation techniques, including scrollbars, drag-and-flick, or pinch-to-zoom. We built a functional hardware prototype and developed an interaction vocabulary for different applications. We conducted two laboratory studies. The first one showed that users can precisely and efficiently control SidePress; the second, that SidePress can be more efficient than drag-and-flick touch gestures when scrolling large documents.

Powerup – Current technology such as Arduino (http://arduino.cc/) opens up a large space for designing new electronic devices. We built the Power-up Button [30] by combining pressure and proximity sensing to enable gestural interaction with one thumb (Figure 9, right). Combined with a gesture recognizer that takes the hand's anatomy into account, the Power-up Button can recognize six different mid-air gestures performed on the side of a mobile device. This gives it, for instance, enough expressive power to provide full one-handed control of interface widgets displayed on screen. This technology can complement touch input, and can be particularly useful when interacting eyes-free. It also opens up a larger design space for widget organization on screen: the button enables a more compact layout of interface components than touch input alone would allow. This can be useful, for example, when filling in the numerous fields of a long Web form, or on very small devices.


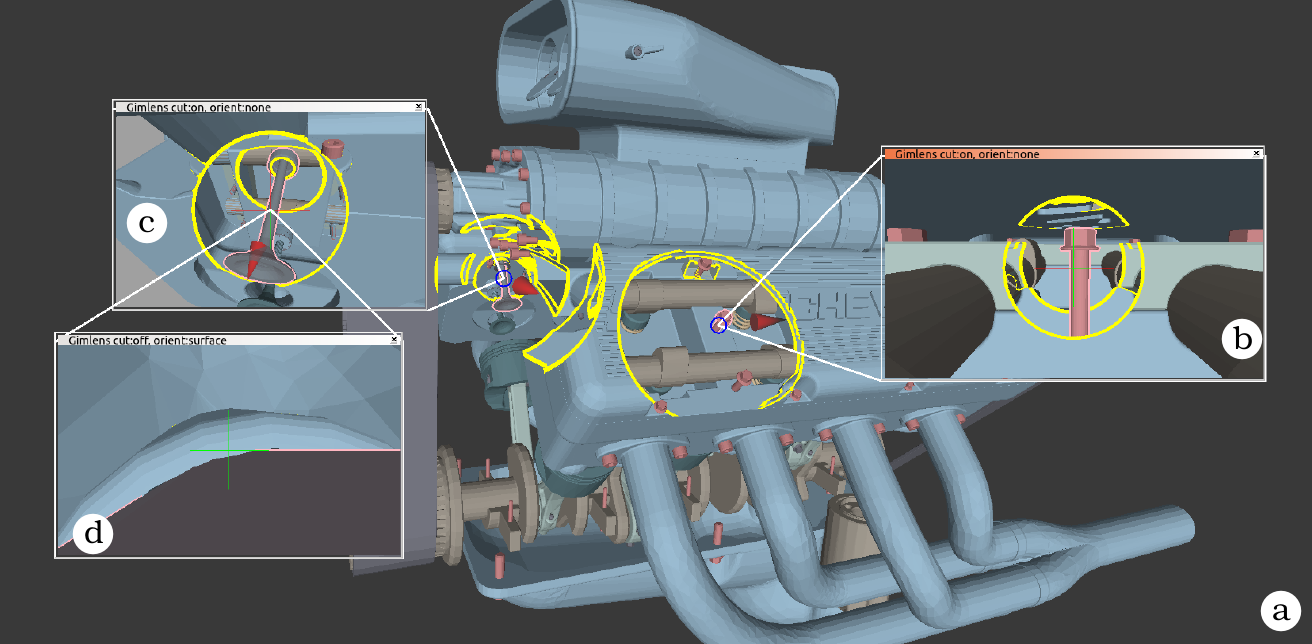

Gimlenses – Complex 3D virtual scenes such as CAD models of airplanes and representations of the human body are notoriously hard to visualize. These models are made of many parts, pieces and layers of varying size that partially occlude or even fully surround one another. Gimlenses [28] provides a multi-view, detail-in-context visualization technique that enables users to navigate complex 3D models by interactively drilling holes into their outer layers to reveal objects that are buried, possibly deep, in the scene (see Figure 10). These holes are constantly adjusted so as to guarantee the visibility of objects of interest from the parent view. Gimlenses can be cascaded and constrained with respect to one another, providing synchronized, complementary viewpoints on the scene. Gimlenses enable users to quickly identify elements of interest, get detailed views of those elements, relate them, and put them in a broader spatial context.


Dashboard Exploration – Visual stories help us communicate knowledge, share and interpret experiences, and have become a focus of visualization research in recent years. We studied the use of storytelling in Business Intelligence (BI) analysis [21] (Best Paper Award). We derived the actual practices in creating and sharing BI stories from in-depth interviews with expert BI analysts (both story “creators” and “readers”). These interviews revealed the need to extend current BI visual analysis applications to enable storytelling, as well as new requirements related to BI visual storytelling. Based on these requirements, we designed and implemented a storytelling prototype tool with appropriate interaction techniques. The tool is integrated in an analysis tool used by our experts, and allows an easy transition from analysis to story creation and sharing. We report experts’ recommendations and reactions to the use of the prototype to create stories, as well as novices’ reactions to reading these stories.

Hybrid-Image Visualizations – Data analysis scenarios often incorporate one or more displays with sufficiently large size and resolution to be comfortably viewed by different people from various distances. Hybrid-image visualizations [15] blend two different visual representations into a single static view, such that each representation can be perceived at a different viewing distance. They can thus be used to enhance overview tasks from a distance and detail-in-context tasks when standing close to the display. Viewers interact implicitly with these visualizations by walking around the space. By taking advantage of humans’ perceptual capabilities, hybrid-image visualizations show different content to viewers depending on their placement, without requiring tracking of viewers in front of a display. Moreover, because hybrid-images use a perception-based blending approach, visualizations intended for different distances can each utilize the entire display.
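The blending idea can be sketched on a one-dimensional signal. This assumes the classic hybrid-image construction, in which the low spatial frequencies of the representation meant for distant viewing are added to the high spatial frequencies of the one meant for close viewing. The exact filters used in [15] are not given here, so the box filter and the function names below are illustrative assumptions.

```python
def low_pass(signal, radius):
    """Box blur: average each sample with its neighbours (window clipped at the edges)."""
    blurred = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        blurred.append(sum(window) / len(window))
    return blurred


def high_pass(signal, radius):
    """Keep only the fine detail: the signal minus its blurred version."""
    return [s - b for s, b in zip(signal, low_pass(signal, radius))]


def hybrid(far_view, near_view, radius):
    """Coarse structure of far_view plus fine detail of near_view."""
    if len(far_view) != len(near_view):
        raise ValueError("both views must have the same length")
    coarse = low_pass(far_view, radius)
    detail = high_pass(near_view, radius)
    return [c + d for c, d in zip(coarse, detail)]


# A slow ramp intended for distant viewers, fine stripes for close viewers.
far_view = [float(i // 4) for i in range(16)]
near_view = [1.0 if i % 2 == 0 else -1.0 for i in range(16)]
blended = hybrid(far_view, near_view, radius=2)
```

Both inputs span the full length of the signal, which mirrors the point that visualizations intended for different distances can each use the entire display. From far away, the eye's limited acuity plays the role of a second low-pass filter that suppresses the stripes and leaves the ramp.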

Evolutionary Visual Exploration – In a high-dimensionality context, the visual exploration of information is challenging, as viewers are often faced with a large space of alternative views on the data. We present a system [14] that combines visual analytics with stochastic optimization to aid the exploration of multidimensional datasets characterized by a large number of possible views or projections. Starting from dimensions whose values are automatically calculated by a PCA, an interactive evolutionary algorithm progressively builds (or evolves) non-trivial viewpoints in the form of linear and non-linear dimension combinations, to help users discover new interesting views and relationships in their data. The system calibrates a fitness function (optimized by the evolutionary algorithm) to take user interactions into account when calculating and proposing new views. Our method leverages automatic tools to detect interesting visual features, and human interpretation to derive meaning, validate the findings and guide the exploration without having to grasp advanced statistical concepts. Our prototype was evaluated through an observational study with five domain experts. It helped them quantify qualitative hypotheses, try out different scenarios to dynamically transform their data, better formulate their research questions, and build new hypotheses for further investigation.
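The general loop of evolving dimension combinations under a user-influenced fitness function might look like the following sketch. It is not the system described in [14]: it handles linear combinations only, uses the variance of the projected points as a stand-in for an "interesting view" measure, and models user interaction as a list of previously liked weight vectors. All names and parameter values are hypothetical.

```python
import random


def project(rows, weights):
    """Project each data row onto a linear combination of its dimensions."""
    return [sum(w * x for w, x in zip(weights, row)) for row in rows]


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def normalize(weights):
    """Scale to unit length so candidates are compared on direction only."""
    norm = sum(w * w for w in weights) ** 0.5
    return [w / norm for w in weights] if norm > 0 else list(weights)


def fitness(rows, weights, liked=()):
    """Assumed surrogate: spread of the projection, plus a bonus for being
    similar to views the user has marked as interesting."""
    score = variance(project(rows, weights))
    for favourite in liked:
        score += abs(sum(a * b for a, b in zip(weights, favourite)))
    return score


def evolve(rows, generations=30, pop_size=20, sigma=0.3, liked=(), seed=0):
    """Elitist evolutionary search over weight vectors; returns (best, history)."""
    rng = random.Random(seed)
    dims = len(rows[0])
    population = [normalize([rng.gauss(0, 1) for _ in range(dims)])
                  for _ in range(pop_size)]
    history = []

    def score(weights):
        return fitness(rows, weights, liked)

    for _ in range(generations):
        population.sort(key=score, reverse=True)
        history.append(score(population[0]))
        parents = population[:pop_size // 2]       # keep the better half
        children = [normalize([w + rng.gauss(0, sigma) for w in rng.choice(parents)])
                    for _ in range(pop_size - len(parents))]
        population = parents + children            # parents survive unchanged
    population.sort(key=score, reverse=True)
    return population[0], history


# Toy data: almost all of the spread lies along the first dimension.
rows = [[float(x), 0.1 * (x % 2)] for x in range(10)]
best, history = evolve(rows)
```

Passing a non-empty `liked` list shifts the search towards views resembling the ones the user selected, which is a crude analogue of calibrating the fitness function from user interactions.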